For one of my projects, I needed a PowerShell script to find duplicate files in a shared network folder on a file server. There are a number of third-party tools for finding and removing duplicate files in Windows, but most of them are commercial or not suitable for automated scenarios.

Because files may have different names but identical content, you should not compare files by name only. It is better to calculate the hash of every file and look for files whose hashes match.
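To illustrate the idea, here is a minimal sketch that creates a scratch folder in the temp directory with two differently named files holding the same text and a third file with other content. The folder and file names are made up for the demonstration. Get-FileHash (SHA256 by default) should return the same hash for the two identical files:

```powershell
# Scratch folder with illustrative file names (not part of the original article).
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ("hashdemo_" + [guid]::NewGuid().ToString("N"))
New-Item -ItemType Directory -Path $demo | Out-Null
Set-Content -Path (Join-Path $demo 'report.txt')     -Value 'same content'
Set-Content -Path (Join-Path $demo 'copy_of_it.txt') -Value 'same content'
Set-Content -Path (Join-Path $demo 'other.txt')      -Value 'different content'

# Hash every file; the names differ, but identical content yields an identical hash.
$hashes = Get-ChildItem -Path $demo -File | Get-FileHash
$hashes | Select-Object Hash, Path

# Collect the files whose hash occurs more than once.
$sameHash = ($hashes | Group-Object Hash | Where-Object Count -gt 1).Group

# Clean up the scratch folder.
Remove-Item -Path $demo -Recurse -Force
```

After the run, $sameHash holds the two files with identical content, regardless of their names.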

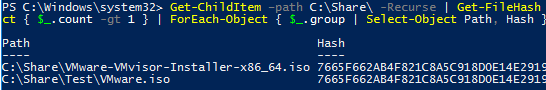

The following PowerShell one-liner command allows you to recursively scan a folder (including its subfolders) and find duplicate files. In this example, two identical files with the same hashes were found:

Get-ChildItem -Path C:\Share\ -Recurse -File | Get-FileHash | Group-Object -Property Hash | Where-Object { $_.Count -gt 1 } | ForEach-Object { $_.Group | Select-Object Path, Hash }

This PowerShell one-liner is easy to use, but its performance is quite poor: if the folder contains many files, calculating all of their hashes takes a long time. It is faster to compare files by size first, because the size is a ready-made file attribute that doesn’t need to be calculated, and then calculate hashes only for the files that share their size with at least one other file:

$file_dublicates = Get-ChildItem -Path C:\Share\ -Recurse -File | Group-Object -Property Length | Where-Object { $_.Count -gt 1 } | Select-Object -ExpandProperty Group | Get-FileHash | Group-Object -Property Hash | Where-Object { $_.Count -gt 1 } | ForEach-Object { $_.Group | Select-Object Path, Hash }

You may compare the performance of both commands on a test folder using the Measure-Command cmdlet:

Measure-Command {your_powershell_command}
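As a rough sketch of such a comparison, the function below times both approaches on the same folder and returns the elapsed seconds. The function name Measure-DuplicateScan and its output property names are my own and not part of any module:

```powershell
# Hypothetical helper: times the "hash everything" and "size first" scans of one folder.
function Measure-DuplicateScan {
    param([Parameter(Mandatory)][string]$Path)

    # Approach 1: calculate a hash for every file.
    $hashAll = Measure-Command {
        Get-ChildItem -Path $Path -Recurse -File | Get-FileHash |
            Group-Object Hash | Where-Object Count -gt 1
    }

    # Approach 2: hash only the files that share their size with another file.
    $sizeFirst = Measure-Command {
        Get-ChildItem -Path $Path -Recurse -File |
            Group-Object Length | Where-Object Count -gt 1 |
            Select-Object -ExpandProperty Group | Get-FileHash |
            Group-Object Hash | Where-Object Count -gt 1
    }

    [pscustomobject]@{
        HashAllSeconds   = $hashAll.TotalSeconds
        SizeFirstSeconds = $sizeFirst.TotalSeconds
    }
}

# Example call (the path is the one used in the examples above):
# Measure-DuplicateScan -Path 'C:\Share\'
```

Keep in mind that the second measurement may benefit from files already cached by the operating system during the first one, so it is worth repeating the test a few times before drawing conclusions.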

In my test on a folder containing 2,000 files, the second command was much faster than the first: the first command took 10 minutes, while the second finished in 3 seconds.

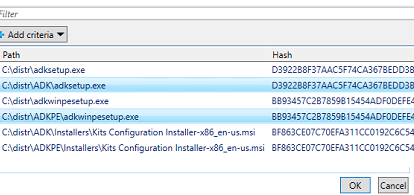

You can prompt a user to select the duplicate files to be deleted. To do this, pipe the list of duplicate files to the Out-GridView cmdlet:

$file_dublicates | Out-GridView -Title "Select files to delete" -PassThru | Remove-Item -Verbose -WhatIf

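The user can select the files to delete in the table (hold down CTRL to select multiple files) and click OK. Because of the -WhatIf parameter, Remove-Item only reports which files would be deleted; remove this parameter to actually delete the selected files.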
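For unattended runs where nobody can click through a grid, one possible variation is to keep one file in every group of duplicates and return only the extra copies. The sketch below reuses the size-then-hash pipeline; the function name Get-DuplicateCopy is hypothetical, and keeping the first path in sort order is an arbitrary choice that you may want to replace with your own rule (for example, the oldest file):

```powershell
# Hypothetical helper: returns every duplicate except one "kept" file per group.
function Get-DuplicateCopy {
    param([Parameter(Mandatory)][string]$Path)

    Get-ChildItem -Path $Path -Recurse -File |
        Group-Object Length | Where-Object Count -gt 1 |
        Select-Object -ExpandProperty Group | Get-FileHash |
        Group-Object Hash | Where-Object Count -gt 1 |
        ForEach-Object {
            # Sort so the kept file is chosen deterministically, then skip it.
            $_.Group | Sort-Object Path | Select-Object -Skip 1
        }
}

# Preview only: -WhatIf reports what would be removed without deleting anything.
# Get-DuplicateCopy -Path 'C:\Share\' | Remove-Item -Verbose -WhatIf
```

The returned objects come from Get-FileHash, so they carry the Path and Hash properties and can be piped to Remove-Item in the same way as the list used with Out-GridView.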

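You can also replace duplicate files with hard links. This approach keeps every file path in place and significantly reduces disk usage, because all linked paths share a single copy of the data. Note that a hard link can only point to a file on the same volume.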

param(
    [Parameter(Mandatory=$True)]
    [ValidateScript({Test-Path -Path $_ -PathType Container})]
    [string]$dir1,
    [Parameter(Mandatory=$True)]
    [ValidateScript({(Test-Path -Path $_ -PathType Container) -and $_ -ne $dir1})]
    [string]$dir2
)
# Group files by size first, then hash only the files that share their size with another file
Get-ChildItem -Path $dir1, $dir2 -Recurse -File |
    Group-Object Length | Where-Object {$_.Count -ge 2} |
    Select-Object -Expand Group | Get-FileHash |
    Group-Object Hash | Where-Object {$_.Count -ge 2} |
    ForEach-Object {
        # Keep the first file of each group and replace every other copy with a hard link to it
        $original = $_.Group[0].Path
        $_.Group | Select-Object -Skip 1 | ForEach-Object {
            $duplicate = $_.Path
            Remove-Item -LiteralPath $duplicate
            New-Item -ItemType HardLink -Path $duplicate -Target $original | Out-Null
            #fsutil hardlink create $duplicate $original
        }
    }

To run the script, use the following command format:

.\hardlinks.ps1 -dir1 d:\folder1 -dir2 d:\folder2

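The script can be used to find duplicates of static files (that don’t change!) and replace them with hard links. Since all hard links to a file point to the same data, a change made through one path appears under every other linked path as well.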
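The following minimal sketch shows why this matters. It creates a file and a hard link to it in a scratch folder (the folder and file names are illustrative only), writes through the link, and then reads the content back through the original path:

```powershell
# Scratch folder with illustrative file names.
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ("linkdemo_" + [guid]::NewGuid().ToString("N"))
New-Item -ItemType Directory -Path $demo | Out-Null
$original = Join-Path $demo 'original.txt'
$link     = Join-Path $demo 'link.txt'

Set-Content -Path $original -Value 'version 1'
New-Item -ItemType HardLink -Path $link -Target $original | Out-Null

# Overwrite the content through the link, then read it back through the original path.
Set-Content -Path $link -Value 'version 2'
$seenViaOriginal = Get-Content -Path $original
$seenViaOriginal

# Clean up the scratch folder.
Remove-Item -Path $demo -Recurse -Force
```

Both paths refer to the same data, so the text written through link.txt is what original.txt returns. For files that users edit independently, this is usually not what you want.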

You can also use the dupemerge console tool to replace duplicate files with hard links.

2 comments

I’m getting an error when I run this code.

$file_dublicates | Out-GridView -Title “Select files to delete” -OutputMode Multiple –PassThru|Remove-Item –Verbose –WhatIf

Out-GridView : Parameter set cannot be resolved using the specified named parameters.

At File location

+ … ublicates | Out-GridView -Title “Select files” -OutputMode Multiple – …

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : InvalidArgument: (:) [Out-GridView], ParameterBindingException

+ FullyQualifiedErrorId : AmbiguousParameterSet,Microsoft.PowerShell.Commands.OutGridViewCommand

From Out-GridView docs: The PassThru parameter of Out-GridView lets you send multiple items down the pipeline. The PassThru parameter is equivalent to using the Multiple value of the OutputMode parameter.

Just remove -OutputMode Multiple and it works.