In previous versions of Hyper-V (starting with Windows 7/Windows Server 2008 R2), the RemoteFX vGPU feature could be used to pass through a discrete GPU from the host to a virtual machine. However, starting with Windows 10 1809 and Windows Server 2019, support for RemoteFX has been removed. The Discrete Device Assignment (DDA) feature is offered instead.

Discrete Device Assignment allows physical host PCI/PCIe devices (including GPU and NVMe) to be passed through from the host to the Hyper-V virtual machine. Basic requirements for using DDA in Hyper-V:

- Available for Hyper-V Gen 2 virtual machines only;

- The VM must have dynamic memory and checkpoints disabled;

- The physical graphics card must support GPU Partitioning;

- If WSL (Windows Subsystem for Linux) is enabled on the host, a code 43 video error might occur in the VM when passing through the graphics card using GPU-P;

- Although SR-IOV (Single Root Input/Output Virtualization) is not listed in the DDA requirements, GPU passthrough to a VM will not work correctly if the hardware doesn't support it.
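The VM-side requirements from the list above can be checked from PowerShell before going any further. This is a minimal sketch, assuming the Hyper-V PowerShell module is installed on the host; the VM name munGPUVM1 is the example name used in the commands later in this article, so replace it with your own.

```powershell
# Example VM name (assumption: replace with the name of your VM)
$vmName = 'munGPUVM1'

# Show the settings that matter for DDA: generation, dynamic memory, checkpoints
Get-VM -Name $vmName |
    Select-Object Name, Generation, DynamicMemoryEnabled, CheckpointType

# If needed, disable dynamic memory (the VM must be powered off) and checkpoints
Set-VMMemory -VMName $vmName -DynamicMemoryEnabled $false
Set-VM -Name $vmName -CheckpointType Disabled
```

The VM generation cannot be changed after the VM is created, so a Gen 1 VM has to be recreated as Gen 2.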

Discrete Device Assignment is only available on Windows Server with the Hyper-V role. On desktop Windows 10 and 11, you can use GPU partitioning instead to share the video card with the virtual machine. This article describes how to assign a physical GPU to a virtual machine on Hyper-V in both cases.

Enable GPU Passthrough to Hyper-V VM on Windows Server

Discrete Device Assignment (DDA) allows PCIe devices to be passed through to a virtual machine on Windows Server 2016 and newer.

Before assigning the GPU to the VM, you must change its configuration.

Set the automatic stop action of the VM to Turn Off (VM start and stop actions are described in the post Manage VM startup and boot order on Hyper-V):

Set-VM -Name munGPUVM1 -AutomaticStopAction TurnOff

Enable guest-controlled cache types and set the size of the low (32-bit) and high (above 32-bit) MMIO space:

Set-VM -Name munGPUVM1 -GuestControlledCacheTypes $True -LowMemoryMappedIoSpace 3Gb -HighMemoryMappedIoSpace 33280Mb

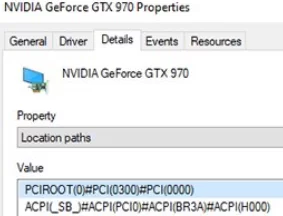
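To confirm that the settings were applied, you can read them back from the VM object. A small sketch, assuming the same example VM name as in the commands above:

```powershell
# Read back the DDA-related settings of the example VM
Get-VM -Name 'munGPUVM1' |
    Format-List Name, AutomaticStopAction, GuestControlledCacheTypes,
                LowMemoryMappedIoSpace, HighMemoryMappedIoSpace
```

The MMIO sizes are reported in bytes, so they will not look exactly like the 3Gb and 33280Mb values that were typed in.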

Next, find out the physical path to the graphics card’s PCIe device on the Hyper-V host. To do this, open the GPU Properties in Device Manager, go to the Details tab, and find the Location Paths property. Copy the value that starts with PCIROOT.

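Or use PowerShell to list the display adapters and their instance IDs (the location path can then be read from the DEVPKEY_Device_LocationPaths property of the device):

Get-PnpDevice | Where-Object {$_.Present -eq $true} | Where-Object {$_.Class -eq "Display"} | Select-Object Name,InstanceId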
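The location path itself can be read with Get-PnpDeviceProperty. The sketch below is one possible way to do it; it assumes the GPU is reported in the Display device class and that the device exposes a path starting with PCIROOT, as described for Device Manager above.

```powershell
# List present display adapters together with their PCIe location path
Get-PnpDevice -Class Display -PresentOnly | ForEach-Object {
    # The property holds several paths; the DDA cmdlets need the PCIROOT(...) one
    $paths = (Get-PnpDeviceProperty -InstanceId $_.InstanceId `
                -KeyName 'DEVPKEY_Device_LocationPaths').Data

    [pscustomobject]@{
        Name         = $_.FriendlyName
        InstanceId   = $_.InstanceId
        LocationPath = $paths | Where-Object { $_ -like 'PCIROOT*' } | Select-Object -First 1
    }
}
```

The LocationPath value is what goes into the -LocationPath parameter of the DDA cmdlets.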

Disable this graphics card on the Hyper-V host (in Device Manager or with the Disable-PnpDevice cmdlet), and then dismount it from the host using PowerShell:

Dismount-VMHostAssignableDevice -LocationPath "PCIROOT(0)#PCI(0300)#PCI(0000)" -Force

Now connect the physical host GPU adapter to the virtual machine:

Add-VMAssignableDevice -VMName munGPUVM1 -LocationPath "PCIROOT(0)#PCI(0300)#PCI(0000)"

Then power on the VM and check that your GPU appears in the Display Adapters section of the Device Manager, along with the Microsoft Hyper-V Video device.
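The assignment can also be checked from the host side. A short sketch, assuming the example VM name munGPUVM1:

```powershell
# Devices currently assigned to the VM through DDA
Get-VMAssignableDevice -VMName 'munGPUVM1' |
    Format-List VMName, LocationPath, InstanceID

# Devices that are dismounted from the host and can be assigned to a VM
Get-VMHostAssignableDevice
```

If the first command returns nothing, the device was not attached to the VM.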

To disconnect the GPU from the VM and return it to the host (the VM must be turned off; $locationPath holds the device location path used above):

Remove-VMAssignableDevice -VMName munGPUVM1 -LocationPath $locationPath

Mount-VMHostAssignableDevice -LocationPath $locationPath
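Instead of typing the location path again, it can be read back from the VM. The following is a sketch of that approach, assuming the example VM name munGPUVM1; note that it returns every DDA device assigned to the VM, not only the GPU.

```powershell
# Example VM name (assumption: replace with your own)
$vmName = 'munGPUVM1'

# The VM must be off before a DDA device can be removed
Stop-VM -Name $vmName

# Return every DDA-assigned device of this VM to the host
foreach ($device in Get-VMAssignableDevice -VMName $vmName) {
    Remove-VMAssignableDevice -VMName $vmName -LocationPath $device.LocationPath
    Mount-VMHostAssignableDevice -LocationPath $device.LocationPath
}
```

If the adapter was disabled on the host before it was dismounted, enable it again in Device Manager afterwards.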

Sharing the Physical GPU with Hyper-V VM on Windows 10 or 11

GPU Partitioning (GPU-P) is available in Hyper-V virtual machines running Windows 10/11 build 1903 or later.

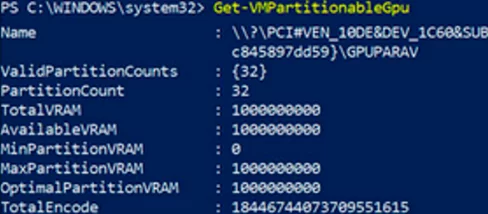

Use the Get-VMPartitionableGpu (Windows 10) or Get-VMHostPartitionableGpu (Windows 11) command to check if your graphics card supports GPU partitioning mode.

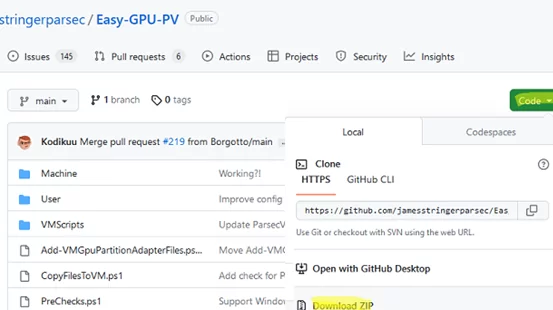
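Because the cmdlet name differs between Windows 10 and Windows 11, a script can pick whichever one is available. This is only a sketch; the property names in the output (ValidPartitionCounts, PartitionCount) are the ones these cmdlets report on recent builds and may vary.

```powershell
# Find whichever GPU partitioning cmdlet this build of Windows provides
$cmd = Get-Command -Name Get-VMHostPartitionableGpu, Get-VMPartitionableGpu `
        -ErrorAction SilentlyContinue | Select-Object -First 1

if ($cmd) {
    # One object per partitionable GPU; empty output means none was found
    & $cmd | Format-List Name, ValidPartitionCounts, PartitionCount
} else {
    Write-Warning 'No GPU partitioning cmdlet found. Is the Hyper-V PowerShell module installed?'
}
```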

The Add-VMGpuPartitionAdapter cmdlet is used to pass through the video adapter from the host to the VM. Before using it, copy the graphics card drivers from the Hyper-V host to the virtual machine with the Easy-GPU-PV script (https://github.com/jamesstringerparsec/Easy-GPU-PV).

Download the ZIP archive containing the script and extract it to a folder on the Hyper-V host.

Open an elevated PowerShell console, then allow running PowerShell scripts in the current session.

Set-ExecutionPolicy -Scope Process -ExecutionPolicy Bypass -Force

Run the script:

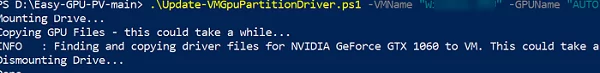

.\Update-VMGpuPartitionDriver.ps1 -VMName myVM1 -GPUName "AUTO"

The script copies the GPU drivers from the host to the VM.

Now change the VM settings and assign the GPU to it:

Set-VM -VMName myVM1 -GuestControlledCacheTypes $true -LowMemoryMappedIoSpace 1Gb -HighMemoryMappedIoSpace 32Gb

Add-VMGpuPartitionAdapter -VMName myVM1
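To confirm that the partition adapter was added, or to undo the change later, the matching Get and Remove cmdlets can be used. A brief sketch with the same example VM name:

```powershell
# Show the GPU partition adapter(s) attached to the VM
Get-VMGpuPartitionAdapter -VMName 'myVM1'

# To detach the GPU partition again (with the VM turned off), uncomment:
# Remove-VMGpuPartitionAdapter -VMName 'myVM1'
```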

If you have updated the video drivers on the Hyper-V host, you must also update them on the virtual machine.

.\Update-VMGpuPartitionDriver.ps1 -VMName myVM1 -GPUName "AUTO"

4 comments

I finally got it working, including the games! I could separate gaming and work on two PCs.

I don’t agree with those who say “Hyper-V is not for graphics”.

On VMware Workstation Pro, I experienced frequent crashes, slowdowns and freezes in games and applications such as FreeCAD, Slash3D, Blender and Adobe.

Thanks to the author for writing this!

I'm receiving the following error when I try to dismount the GPU.

I spent a lot of time searching for ACS in the BIOS, without success.

I don't know how to enable it.

Please help me.

My motherboard is a ROG STRIX Z370-H GAMING, BIOS ver: 2701, no update available.

Dismount-VmHostAssignableDevice : The operation failed.

The PCI Express bridges between this device and memory lack support for Access Control Services, which is necessary for securely exposing this device within a virtual machine.

At line:1 char:1

+ Dismount-VmHostAssignableDevice -LocationPath “PCIROOT(0)#PCI(0101)#P …

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : InvalidArgument: (:) [Dismount-VMHostAssignableDevice], VirtualizationException

+ FullyQualifiedErrorId : InvalidParameter,Microsoft.HyperV.PowerShell.Commands.DismountVMHostAssignableDevice

The motherboard chipset must support Access Control Services (ACS) on the PCI Express root ports.

Also check that your CPU supports SLAT (EPT on Intel CPUs) and an IOMMU (Intel VT-d2 or AMD I/O MMU).

I followed all the steps from your guide, but at the end, after the command Add-VMAssignableDevice -VMName VMName -LocationPath "PCIROOT(0)#PCI(0300)#PCI(0000)", the following error occurs when the VM starts.

If I remount the device (GPU) on the host, the VM returns to normal.

How can I solve this problem?

Couldn't start virtual machine (5:18:51 PM). Type: Error. Message:

RemoteException: Failed to start virtual machine W10-AA. Error: ‘W10-AA’ failed to start.

Virtual Pci Express Port (Instance ID 9F2C1609-E884-4435-AFF6-AC765B1A804F): Failed to power on with Error ‘A hypervisor feature is not available to the user.’.

‘W10-AA’ failed to start. (Virtual machine ID BF829271-2322-4396-8CB1-69637772D034)

‘W10-AA’ Virtual Pci Express Port (Instance ID 9F2C1609-E884-4435-AFF6-AC765B1A804F): Failed to power on with Error ‘A hypervisor feature is not available to the user.’ (0xC035001E). (Virtual machine ID BF829271-2322-4396-8CB1-69637772D034)