Tools for creating software RAID arrays from multiple physical disks are built into Windows. First, let’s look at a simple configuration where you need to create a mirror of two physical disks containing data. Next, we will consider how to build a software RAID1 configuration for the boot (system) disk, which contains the bootloader and Windows system files. This configuration ensures that Windows remains bootable and that your data is protected against disk failure.

Modern versions of Windows provide two tools for creating software RAID arrays:

- Classic dynamic disks – allow you to create RAID 0 and RAID 1 volumes on Windows 10/11, and also RAID 5 on Windows Server. Dynamic disks are the subject of this article.

- Storage Spaces – a newer, simpler tool for creating mirror or parity arrays. It cannot be used for the boot drive.

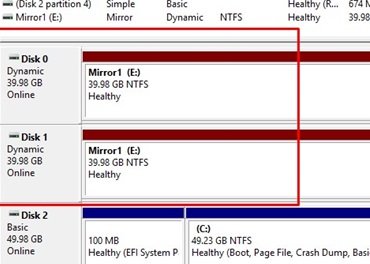

Create a Mirrored Volume with Two Data Disks on Windows

First, let’s look at a simple case where you need to create a mirror (RAID 1) of two data drives on Windows. It is assumed that neither of these disks is the system (boot) disk.

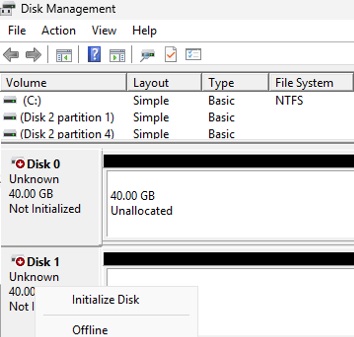

- Open the Disk Management console (diskmgmt.msc). In this example, there are two additional unallocated 40 GB hard disks on the computer.

- Initialize the disks (if you haven’t already).

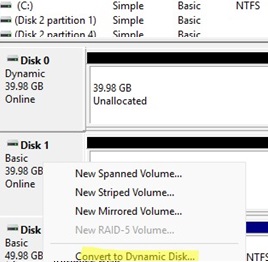

- Right-click each disk and select Convert to Dynamic Disk.

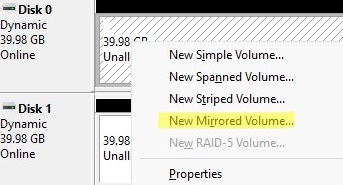

- Then right-click the unallocated space and select New Mirrored Volume.

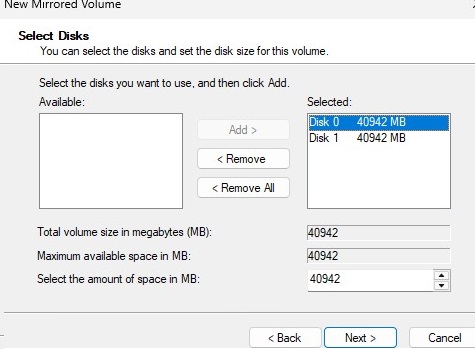

- Add both disks to the mirror:

- Select the drive letter you want to assign to the volume. Then format the volume.

- The result is a software mirror of two disks. It can be accessed using an assigned drive letter (E: in this example).
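The same data mirror can also be built from the command line with diskpart. The Python sketch below only generates the diskpart script, so you can review it before running anything. It assumes the two data disks are Disk 1 and Disk 2, are initialized, basic, and empty, and that E: is free. The function name `data_mirror_script` is hypothetical; take the real disk numbers from `list disk`.

```python
def data_mirror_script(disk_a: int, disk_b: int, letter: str, label: str = "Data") -> str:
    """Return a diskpart script that mirrors two empty, initialized basic disks.

    Hypothetical helper: take the disk numbers from `list disk` on your machine.
    """
    if disk_a == disk_b:
        raise ValueError("a mirror needs two different disks")
    lines = []
    for disk in (disk_a, disk_b):
        # Mirrored volumes require dynamic disks.
        lines += [f"select disk {disk}", "convert dynamic"]
    lines += [
        # Without size=, the volume takes the remaining free space of the
        # smaller disk and the same amount on the other disk.
        f"create volume mirror disk={disk_a},{disk_b}",
        # After "create volume" the new volume has focus, so the next
        # commands apply to it.
        f'format fs=ntfs quick label="{label}"',
        f"assign letter={letter}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Save the output (e.g. to mirror-data.txt) and run: diskpart /s mirror-data.txt
    print(data_mirror_script(1, 2, "E"), end="")
```

For example, `python make_mirror.py > mirror-data.txt` followed by `diskpart /s mirror-data.txt` from an elevated prompt. Generating the script instead of running it directly keeps a human check between the script and the disks.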

If a disk in a software RAID 1 array fails, remove the failed disk from the mirror and add a new disk to the mirror using the same process. The data on the remaining disk is then automatically synchronized to the new one.

Configuring a Software RAID1 for the Windows Boot Drive

Now let’s look at a more complex configuration where you need to create a software RAID for a system disk where Windows is installed.

The following configuration is used:

- A UEFI computer running Windows Server 2025 (this could also be Windows 10/11 or the free Hyper-V Server).

- The GPT partition table is used on the system disk.

- A new, empty disk of similar size is connected to the computer and used as a mirror of the system disk.

Our task is to create a software mirror (RAID1 – Mirroring) of two hard disks and copy the boot loader configuration so that the computer can boot from either disk.

In short, the main steps are:

- Connect the second disk to the computer

- Create a GPT partition table on the second disk and partitions similar to the first (system) disk.

- Convert both disks to dynamic

- Mirror the disks

- Update the EFI partition and the BCD bootloader on the second disk

- Test booting from the first and the second hard drive

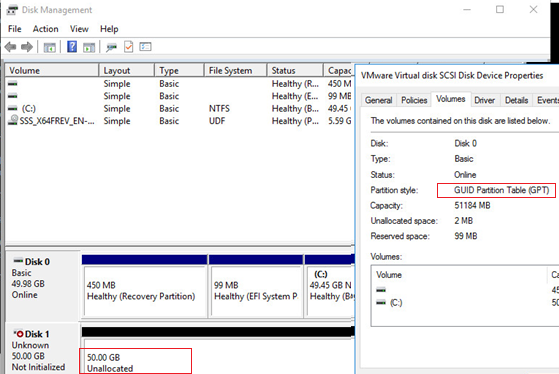

First, open the Disk Management snap-in (diskmgmt.msc) and make sure that the first disk uses the GPT partition table (Disk Properties -> Volumes -> Partition style: GUID Partition Table (GPT)) and that the second disk is empty (unallocated).

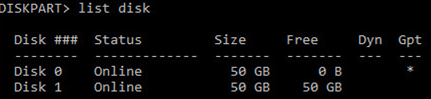

Open a command prompt as an administrator and run diskpart. Then type:

DISKPART> list disk

Two local disks are available:

- Disk 0 – the system disk with a GPT partition table, where Windows is installed

- Disk 1 – an empty, unpartitioned disk

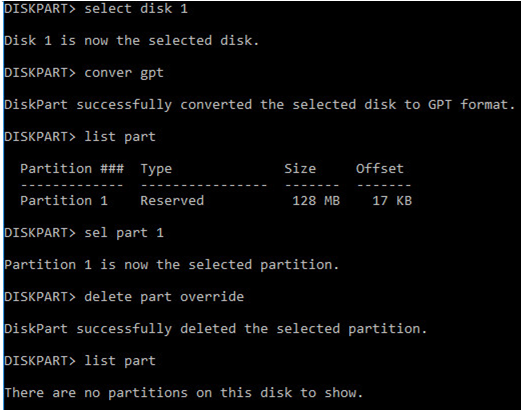

Clean the second disk and convert it to GPT. The clean command erases everything on the selected disk, so double-check the disk number first:

select disk 1

clean

convert GPT

List the partitions on the second disk:

list part

If any partition is found on Disk 1 (in my example, Partition 1 of type Reserved with a size of 128 MB), delete it:

sel part 1

delete partition override

Display the list of partitions on the first disk (Disk 0). Then you need to create the same partitions on Disk 1.

select disk 0

list part

There are 4 partitions on the system disk:

- Recovery – 450 MB, a recovery partition with WinRE

- System – 99 MB, the EFI system partition

- Reserved – 16 MB, the MSR partition

- Primary – 49 GB, the main partition with the Windows installation

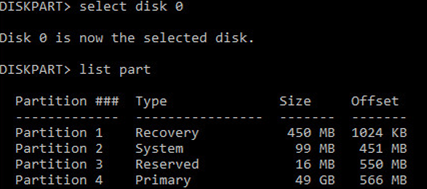
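To copy the layout without retyping it, you can parse the `list part` output. The Python sketch below assumes English diskpart output with the Partition ###, Type, Size, and Offset columns shown in the screenshot. The sample rows in the usage check are illustrative. diskpart rounds the sizes it prints, so treat them as approximate. The main (Primary) partition is not recreated anyway, because the mirror fills that space later.

```python
import re
from typing import NamedTuple

# Matches rows like "  Partition 1    Recovery           450 MB  1024 KB".
# A leading "*" marks the currently selected partition.
ROW_RE = re.compile(r"^\s*\*?\s*Partition\s+(\d+)\s+(.+?)\s+(\d+)\s+(KB|MB|GB|TB)\b")
UNIT_MB = {"KB": 1 / 1024, "MB": 1, "GB": 1024, "TB": 1024 * 1024}


class Part(NamedTuple):
    number: int
    kind: str       # Type column, e.g. "Recovery", "System", "Reserved", "Primary"
    size_mb: float  # Size column converted to MB (rounded by diskpart)


def parse_list_partition(text: str) -> list[Part]:
    """Extract partition rows from English `list part` output; other lines are ignored."""
    parts = []
    for line in text.splitlines():
        match = ROW_RE.match(line)
        if match:
            number, kind, size, unit = match.groups()
            parts.append(Part(int(number), kind, int(size) * UNIT_MB[unit]))
    return parts
```

The header row ("Partition ###") does not match, because the pattern requires a partition number after the word Partition.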

Create the same partition structure on disk 1:

select disk 1

create partition primary size=450

format quick fs=ntfs label="WinRE"

set id="de94bba4-06d1-4d40-a16a-bfd50179d6ac"

gpt attributes=0x8000000000000001

create partition efi size=99

create partition msr size=16

list part

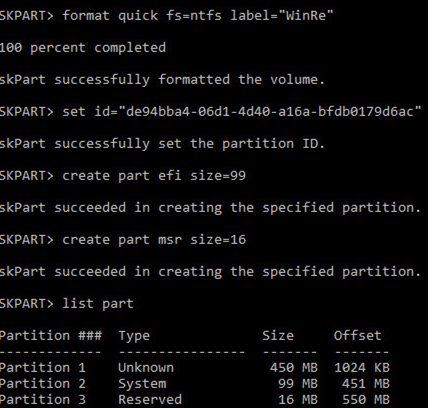

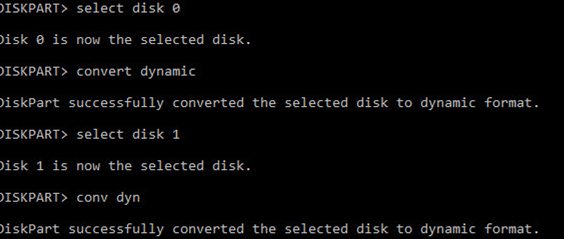
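The commands above can also be generated from the Disk 0 layout. Below is a minimal Python sketch, assuming the same layout as in this example (Recovery, System, Reserved, then the Windows partition last). It reuses the recovery type ID and GPT attributes from the commands above. The function name `clone_layout_script` is hypothetical, and the result is meant to be saved to a file and run with `diskpart /s`.

```python
# Values taken from the diskpart commands above (recovery partition type ID
# and GPT attributes).
RECOVERY_ID = "de94bba4-06d1-4d40-a16a-bfd50179d6ac"
RECOVERY_ATTRS = "0x8000000000000001"


def clone_layout_script(disk: int, layout: list[tuple[str, int]]) -> str:
    """Return diskpart commands that recreate the service partitions on `disk`.

    `layout` lists (type, size_mb) pairs in on-disk order, as shown by
    `list part` for Disk 0. The Primary (Windows) partition is skipped because
    the mirror fills that space later; this sketch only supports layouts where
    it is the last partition.
    """
    lines = [f"select disk {disk}"]
    seen_primary = False
    for kind, size in layout:
        if seen_primary:
            raise ValueError("partitions after the Windows partition are not supported")
        if kind == "Recovery":
            lines += [
                f"create partition primary size={size}",
                'format quick fs=ntfs label="WinRE"',
                f'set id="{RECOVERY_ID}"',
                f"gpt attributes={RECOVERY_ATTRS}",
            ]
        elif kind == "System":
            lines.append(f"create partition efi size={size}")
        elif kind == "Reserved":
            lines.append(f"create partition msr size={size}")
        elif kind == "Primary":
            seen_primary = True
        else:
            raise ValueError(f"unexpected partition type: {kind}")
    lines.append("list part")
    return "\n".join(lines) + "\n"
```

The sketch refuses layouts with partitions after the Windows partition. Many Windows 10/11 installations put the recovery partition there, and recreating it in front of the free space would produce a different layout.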

Then convert both disks to dynamic:

select disk 0

convert dynamic

select disk 1

convert dynamic

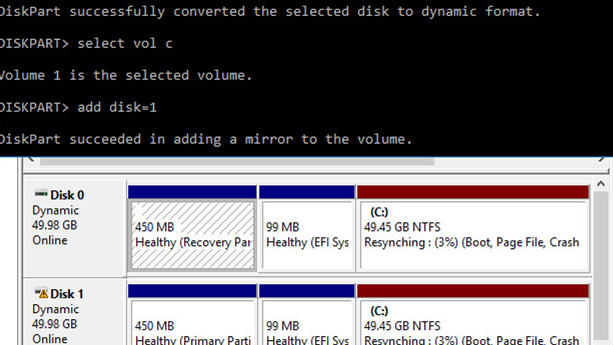

Create a mirror of the system volume (drive letter C:). Select the volume on Disk 0 and add a mirror for it on Disk 1:

select volume c

add disk=1

The following message will appear:

DiskPart succeeded in adding a mirror to the volume

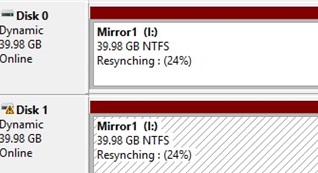

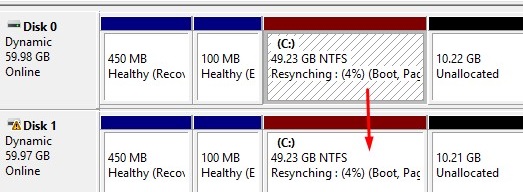
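The conversion and mirroring commands can also be run non-interactively with `diskpart /s`. Below is a minimal Python sketch, assuming Disk 0 is the system disk and Disk 1 is the prepared mirror disk. The function names are hypothetical. By default it only returns the script; it runs diskpart only when you pass `dry_run=False` from an elevated prompt.

```python
import subprocess
import tempfile
from pathlib import Path


def system_mirror_script(source_disk: int = 0, mirror_disk: int = 1, volume: str = "c") -> str:
    """Same commands as above: convert both disks to dynamic, then add the mirror."""
    return "\n".join([
        f"select disk {source_disk}",
        "convert dynamic",
        f"select disk {mirror_disk}",
        "convert dynamic",
        f"select volume {volume}",
        f"add disk={mirror_disk}",
    ]) + "\n"


def run_diskpart(script: str, dry_run: bool = True) -> str:
    """Run `script` with `diskpart /s` (elevated prompt required).

    With dry_run=True (the default) nothing is executed and the script text
    is returned unchanged, so it can be reviewed first.
    """
    if dry_run:
        return script
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "mirror.txt"
        path.write_text(script, encoding="ascii")
        result = subprocess.run(["diskpart", "/s", str(path)],
                                capture_output=True, text=True)
    # diskpart /s stops at the first failing command and exits with a nonzero code.
    if result.returncode != 0:
        raise RuntimeError(f"diskpart failed ({result.returncode}):\n{result.stdout}")
    return result.stdout


if __name__ == "__main__":
    print(run_diskpart(system_mirror_script()), end="")
```

Call `run_diskpart(system_mirror_script(), dry_run=False)` only after confirming the disk numbers with `list disk`. Converting the wrong disk to dynamic cannot be undone without deleting its volumes.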

Open Disk Management and make sure that synchronization of drive C: has started (the volume status shows Resynching). Wait for it to finish. Depending on the size of the C: volume, this may take several hours.

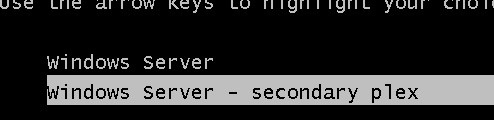

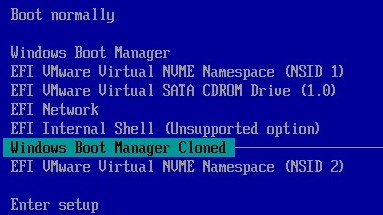

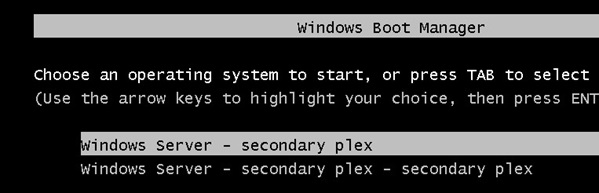

After you restart the computer, the Windows Boot Manager menu asks you to choose a boot entry. If you don’t select one, the default entry (on the first disk) boots after 30 seconds:

- Windows Server

- Windows Server - secondary plex

At this point, the bootloader configuration is stored only on Disk 0. If that disk fails, you won’t be able to boot the operating system from the second drive (Disk 1). Windows software RAID cannot mirror the EFI partition, and because this partition holds the boot files, a failure of the first disk will prevent the computer from booting from the second disk unless the EFI bootloader is repaired manually.

Let’s look at how to copy the EFI partition to the second drive and update the boot loader configuration (BCD) so that you can boot Windows from both the first and second drives.

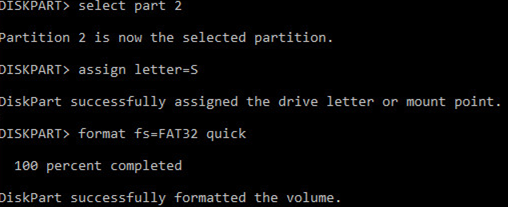

Assign the drive letter S: to the EFI partition on Disk 1 and format it as FAT32:

select disk 1

select part 2

assign letter=S

format fs=FAT32 quick

Then assign the letter P: to the EFI partition on Disk 0:

select disk 0

select partition 2

assign letter=P

exit

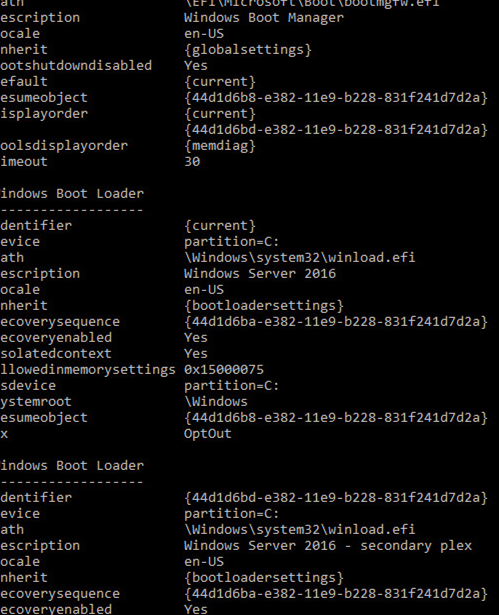

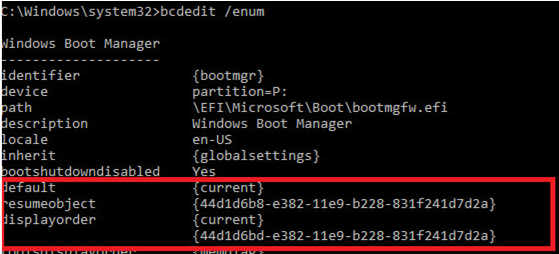

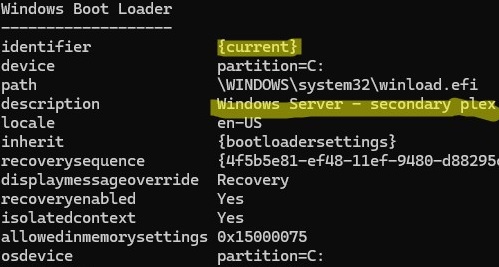

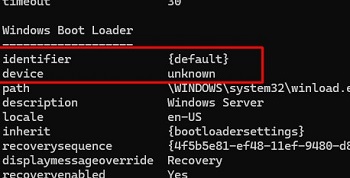

Now you are ready to copy the EFI and the BCD configuration files to the second drive. Display the current BCD bootloader configuration using the following command:

bcdedit /enum

When the mirror was created, the VDS service automatically added a BCD entry for the second mirror disk (labeled Windows Server - secondary plex).

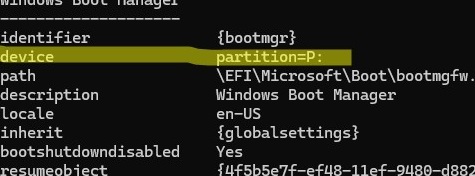

To allow booting from the EFI partition on the second disk if the first disk fails, update the BCD configuration. To do this, copy the current Windows Boot Manager configuration:

bcdedit /copy {bootmgr} /d "Windows Boot Manager Cloned"

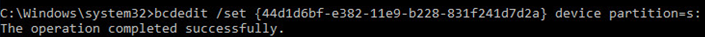

The entry was successfully copied to {44d1d6bf-xxxxxxxxxxxxxxxx}

Copy the resulting identifier and use it in the following command:

bcdedit /set {44d1d6bf-xxxxxxxxxxxxxxxx} device partition=s:

If you did it correctly, you will see this message:

The operation completed successfully.
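Copying the identifier by hand is error-prone. Below is a minimal Python sketch that runs both bcdedit steps and takes the new identifier from the output, assuming it is run elevated on the machine itself. It looks for the GUID pattern rather than the English message text, so it should also work when Windows prints a localized message. The function names are hypothetical. In PowerShell, an unquoted `{bootmgr}` is parsed as a script block, which is one common cause of the "specified copy command is not valid" error. Passing arguments as a list avoids shell parsing entirely.

```python
import re
import subprocess

# A BCD identifier is a GUID in braces.
GUID_RE = re.compile(r"\{[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}\}")

COPY_ARGS = ["bcdedit", "/copy", "{bootmgr}", "/d", "Windows Boot Manager Cloned"]


def extract_guid(output: str) -> str:
    """Return the first {GUID} found in bcdedit output."""
    match = GUID_RE.search(output)
    if match is None:
        raise ValueError(f"no BCD identifier found in: {output!r}")
    return match.group(0)


def set_device_args(guid: str, efi_letter: str) -> list[str]:
    """Arguments for: bcdedit /set {GUID} device partition=<letter>:"""
    return ["bcdedit", "/set", guid, "device", f"partition={efi_letter}:"]


def clone_bootmgr(efi_letter: str = "s") -> str:
    """Copy {bootmgr} and point the copy at the EFI partition `efi_letter`.

    Requires an elevated process; returns the new identifier.
    """
    # A list of arguments is passed without cmd/PowerShell parsing, so the
    # braces and the description with spaces need no extra quoting.
    out = subprocess.run(COPY_ARGS, capture_output=True, text=True, check=True).stdout
    guid = extract_guid(out)
    subprocess.run(set_device_args(guid, efi_letter), check=True)
    return guid
```

The GUID in the check below is made up. The real one is whatever your `bcdedit /copy` prints.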

List the BCD configuration again. A plain bcdedit /enum shows only the active entries, so the cloned boot manager may not appear in it. Use bcdedit /enum all to see every entry, including the new Windows Boot Manager Cloned entry that points to the EFI partition on the second drive.

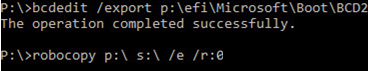

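The active BCD store on the EFI partition of the first disk (Disk 0) is in use by the system and cannot be copied directly. Export it to a file (BCD2), then copy the contents of the EFI partition to the second disk (Disk 1):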

P:

bcdedit /export P:\EFI\Microsoft\Boot\BCD2

robocopy p:\ s:\ /e /r:0

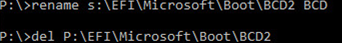
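Because the live BCD and BCD.LOG files are locked, robocopy may report failed files here, as one reader describes in the comments. That is expected, since the exported BCD2 copy is what matters. Below is a small Python sketch that decodes the robocopy exit code and checks that the key files reached S:. Run it right after robocopy, before renaming BCD2. The list of required files is an assumption based on a standard x64 Windows EFI partition.

```python
from pathlib import Path

# Robocopy exit-code bits as documented by Microsoft; 8 or higher means
# that at least one file failed to copy.
ROBOCOPY_BITS = {
    1: "files copied",
    2: "extra files or directories in the destination",
    4: "mismatched files or directories",
    8: "some files failed to copy",
    16: "serious error, no files copied",
}

# Assumed minimum content of the copied x64 EFI partition for this procedure.
REQUIRED_FILES = [
    r"EFI\Microsoft\Boot\bootmgfw.efi",
    r"EFI\Microsoft\Boot\BCD2",
    r"EFI\Boot\bootx64.efi",
]


def decode_robocopy(code: int) -> list[str]:
    """Translate a robocopy exit code into readable flags."""
    if code == 0:
        return ["nothing copied, destination already up to date"]
    return [text for bit, text in ROBOCOPY_BITS.items() if code & bit]


def missing_files(esp_root: str) -> list[str]:
    """Return the REQUIRED_FILES entries that do not exist under esp_root (for example, the S: drive root)."""
    root = Path(esp_root)
    # Forward slashes work with pathlib on Windows as well.
    return [rel for rel in REQUIRED_FILES if not (root / rel.replace("\\", "/")).exists()]
```

For example, an exit code of 9 decodes to "files copied" plus "some files failed to copy". If `missing_files("S:\\")` then returns an empty list, the locked files were the only failures.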

Rename the BCD store on Disk 1:

Rename s:\EFI\Microsoft\Boot\BCD2 BCD

Delete the BCD copy on Disk 0:

Del P:\EFI\Microsoft\Boot\BCD2

Run diskpart again and remove the drive letters from the EFI partitions:

sel vol p

remove

sel vol s

remove

To boot from the bootloader on the second disk (for example, if the first disk fails), open the UEFI boot menu while the device restarts and select the Windows Boot Manager Cloned entry on the second disk.

Then select the Windows Server - secondary plex entry in the BCD boot menu to start the operating system.

To find out which disk you booted from, run:

bcdedit
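In the bcdedit output, the loader you booted from has the identifier {current}. Its description tells you whether you are running from the original disk or the secondary plex. Below is a minimal Python sketch that finds that entry. It assumes English bcdedit output (entries separated by blank lines, a title line, a dashed underline, then key/value lines). Continuation lines such as extra displayorder GUIDs are skipped.

```python
import re
from typing import Optional


def bcd_entries(text: str) -> list[dict[str, str]]:
    """Split English `bcdedit` output into one dict per entry."""
    entries = []
    for block in re.split(r"\n\s*\n", text.strip()):
        lines = block.splitlines()
        # Each entry starts with a title and a dashed underline.
        if len(lines) < 2 or set(lines[1].strip()) != {"-"}:
            continue
        entry = {"title": lines[0].strip()}
        for line in lines[2:]:
            key, _, value = line.strip().partition(" ")
            if value:  # continuation lines have no value and are skipped
                entry[key] = value.strip()
        entries.append(entry)
    return entries


def current_entry(text: str) -> Optional[dict[str, str]]:
    """Return the entry whose identifier is {current}, if any."""
    for entry in bcd_entries(text):
        if entry.get("identifier") == "{current}":
            return entry
    return None
```

Pass it the output of `subprocess.run(["bcdedit"], capture_output=True, text=True).stdout`. Then `current_entry(...)["description"]` is, for example, "Windows Server" or "Windows Server - secondary plex".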

Replacing a Failed Drive in a Software RAID1 Mirror on Windows

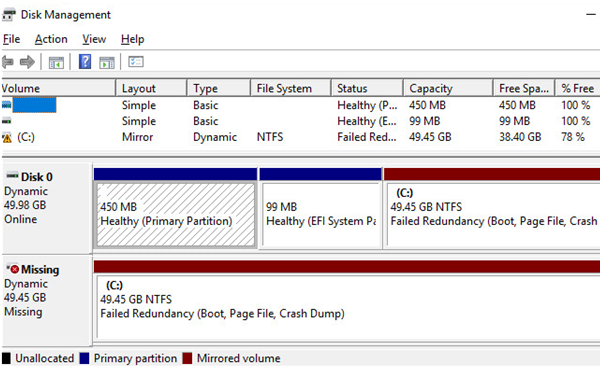

Let’s take a look at how to properly replace a failed disk in a software RAID on Windows. If one of the disks fails, you will see the Failed Redundancy message in the Disk Management snap-in.

In this case, you must replace the failed drive, remove the mirror configuration, rebuild the software RAID, and update the bootloader.

Suppose that in our example Disk 0 (the source disk from which the mirror was synchronized) has failed. At boot time, open the UEFI boot menu and select the Windows Boot Manager Cloned entry.

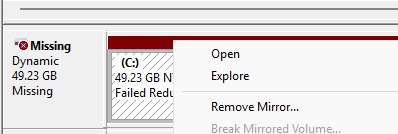

Boot without the new hard drive installed and remove the old RAID 1 configuration. As you can see, one of the disks in the mirror has the status Missing.

Right-click the mirrored volume on the missing disk and select Remove Mirror.

Install a new healthy disk and repeat all the steps of creating partitions, converting the new disk to dynamic, and adding it to the mirror. Don’t forget to assign drive letters to the EFI partitions. Data sync should begin.

Now delete the old entries from the boot loader. Print the current configuration (in cmd.exe):

bcdedit /enum

Since we replaced the first disk, delete the {default} entry (the original Windows Server entry for the failed disk):

bcdedit /delete {default}

Update the bootmgr config:

bcdedit /set {bootmgr} device partition=p:

Then copy the EFI partition to the new disk as described above. As a result, another secondary plex entry is added to the bootloader. You can rename it with bcdedit /set {ID} description "New name".

This software RAID configuration on a UEFI computer protects your data against the failure of either drive. However, when you replace a failed disk, you have to manually recreate the partition layout and update the bootloader configuration.

13 comments

bcdedit /copy {bootmgr} /d “Windows Boot Manager Cloned”

This command did not work

Error message:

The specified copy command is not valid.

Run “bcdedit /?” for command line assistance.

The parameter is incorrect.

“default and resume object” is “normal boot” option and “resume from hibernate” option

You must use “bcdedit /enum all” to get all possible boot loader configs

Hello,

If one gets an error when doing “add disk=1” (something cryptic about free extents), one can do a single command “extend”, and try “add disk=1” again. Happened on Server 2019.

Thanks for the amazing guide.

To amend my previous comment:

*After* selecting the C volume (sel vol c),

In response to the cryptic error when “add disk=1”, do:

shrink desired=10

extend

So, shrink by 10MB first, then extend

Also, I advise to then run:

retain

This poorly documented thing does *something* to the volume, which saves you from “bcdboot” and “bcdedit” errors, if you need to run these.

“retain” seems to solve the following:

bcdboot:

“BFSVC Error: Failed to set element application device. Status = [c000000bb]” (yes, this can happen even with the volumes squeaky clean)

“BFSVC: BCD Error: Failed to convert data for element”

“failed to populate bcd store”

bcdedit /set {default} device partition=C: (cryptic error about unsupported operation)

Lastly, if you have several EFI partitions, like in this mirror case, you might get the following when using bcdedit:

“The boot configuration data store could not be opened” “the requested system device cannot be identified”

The solution is: Assign a drive letter to the desired EFI partition, as explained above, and then:

bcdedit /sysstore driveletter_p:_s:_or_whatever

Please save yourself my 2 days 🙂

Thanks for the great article. This was exactly what I needed.

Cool, thank you for your HowTo! It works with Win2019 also (no problems/errors when doing 100% step by step). Test with missing Drive0 in a VM and test with missing Drive1 successfully.

But there is no different between the first output of “bcdedit /enum” and the second one, or?

Thank you, your guide was easy to follow and worked great on Server 2019! A client’s Supermicro AS-5019D-FTN4 didn’t come with native NVME RAID capabilities, so this satisfied the RAID1 requirement perfectly.

Win Server 2022: “The disk could not be converted to dynamic because security is enabled” (partition security)

No idea where to change partition security.

Windows is crap. Bill Gates is still crap. F**k Windows in all of its flavors. Something simple like raid1, which is trivial in Linux, takes a f**king rocket propulsion manual to do in Windows. F**k Windows in all possible ways.

Your tutorial is great, Thanks!!!

But I have a question, why you didn’t copy WinRe from original to clone then where did you get the set id=”de94bba4-06d1-4d40-a16a-bfd50179d6ac” after creating partition WinRE? Thanks

https://learn.microsoft.com/en-us/windows-hardware/manufacture/desktop/configure-uefigpt-based-hard-drive-partitions?view=windows-11

This partition must use the Type ID: DE94BBA4-06D1-4D40-A16A-BFD50179D6AC.

Thank you for the great step by step process for this.

One hiccup I ran into was when I ran the bcdedit robocopy line, the BCD and BCD.LOG files didn’t copy due to being busy with another process. Do I need to manually stop that process so those two files can copy, or do I not need to worry about it. Will it affect the updating or mirror of the drives/volumes? Thanks

Hi !

Very good howto…

How about the recovery partition on the second disk?

Should’nt it be managed with reagentc /enable?

Is so, how?